This page describes my attempt to use an ARM computer as my main desktop system.

ARM desktop computers existed 29 years ago. Some of them even ran UNIX. I never personally owned one of those machines - they were too expensive - but I did have one of these on my desk when I worked at ARM back in 1991-92.

Since then ARM has been very successful in mobile and embedded applications, but not so much for general-purpose "desktop" computers. For a long time the limiting factor was video output; it was hard to drive a display with an acceptable resolution. That has changed in the last few years as the resolutions required for ARM's mobile and embedded applications have risen. It's now easy to get small ARM boards that can deliver video at HDTV resolutions and beyond.

My previous desktop was a decade-old VIA x86 box which was on its last legs; it normally failed to power on until several attempts had been made. I was holding out for a usable ARM system to replace it. Many of the options were deficient in some way, e.g. missing a crucial interface, but I've now taken the plunge.

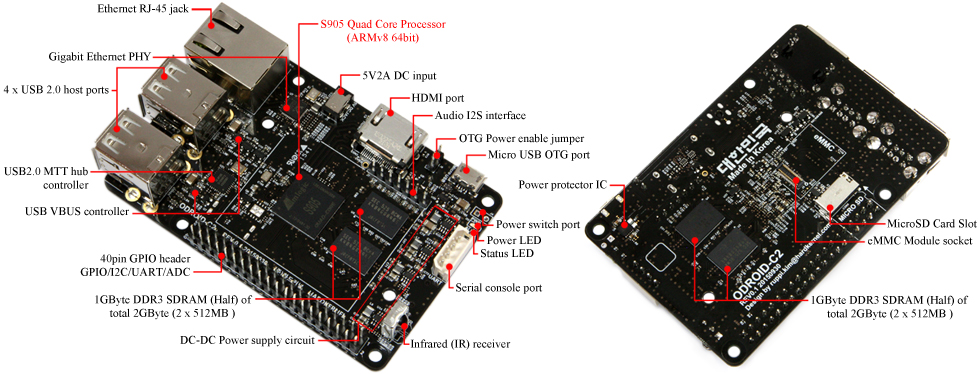

The device that I've finally chosen is the ODROID-C2, by Hardkernel:

The board is based on the Amlogic S905 SoC; this is targeted mainly at set top boxes. It has four 1.5 GHz 64-bit processor cores. The fab process is apparently 28 nm. It's worth noting, however, that these are in-order Cortex A53 cores, not A57 or A72 with out-of-order execution, so instructions-per-clock is more like a 32-bit Cortex A9, not an A15 (see e.g. Wikipedia's comparison of 64-bit ARM cores).

When I bought the board it was advertised as a "2 GHz" board, and it seems that Hardkernel actually believed this to be true. Apparently they had been deceived by Amlogic. The implementation of clock speed control is hidden in a signed binary-blob executed by a separate microcontroller within the SoC, and the deceit was revealed only by people running benchmarks. Though we don't yet know the full story, it feels like "Amlogic can't be trusted, don't buy their products".

It's still fractionally faster than the 1.2 GHz on the DragonBoard 410c and the Pine A64. It also has more RAM than those boards.

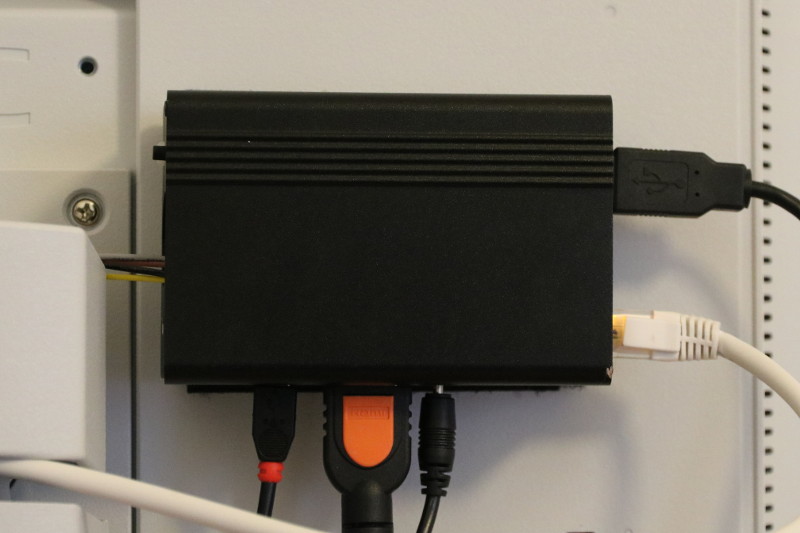

Physically the board has almost the same footprint as some models of Raspberry Pi, which is convenient for things like cases. It also has a GPIO expansion connector with a similar pin-out. One difference is the position of the power connector. I bought a metal case from eBay, and have fixed it to the back of my monitor with velcro. I had to drill a new hole for the power plug.

Hardkernel is based in Korea, but they have distributors in several countries. In the UK the board costs only £53.65 (previously £37.89, then £48.77), inc VAT, but you have to add an eMMC module for another £23.40 to £78.04 (previously £18.05 to £54.13, then £19.51 to £73.16), depending on capacity, and you'll probably also want a power supply, case and perhaps a serial port cable for a few more pounds. (The £ is worth rather less than it was when I first wrote this.)

To learn more about the board, see:

The two weakest aspects of the hardware spec are the 2 GB of RAM and the lack of SATA. So far, 2 GB seems to be fine for me: I can compile a kernel with -j4 and browse the web at the same time, and it doesn't swap. Compiling Boost did make it swap, but it wasn't thrashing, and I could probably have avoided that by reducing the concurrency a bit. I have enabled ZRAM swap, i.e. swapping to compressed RAM, which seems like a better option than swapping to flash. It seems that 300 MB of RAM is permanently reserved at boot for the graphics system; that can be released if you're running headless.

The lack of a SATA interface was more of a concern to me - but Hardkernel have some graphs of the performance of its eMMC implementation, showing about 120 Mbytes/s. I've not tried to confirm that throughput, but I've not seen any problems with filesystem speed. You can get eMMC modules of different sizes from 8 to 128 GB; I chose a 32 GB one. (Much of my storage is over NFS.)

The other main interfaces are USB 2.0, Gigabit Ethernet and HDMI. Unlike some other boards, the ethernet is built into the SoC rather than being attached to USB.

Power consumption is, as you would expect, very modest. The power, measured at the wall, is around 3 W. Of course there isn't a fan. When idle the reported SoC temperature is about 38 C (with room temperature 18 C, and the board in a case with not much ventilation). When busy, for example compiling a kernel, I see it rise to about 75 C. It uses the "hotplug" cpufreq governor, which adjusts the CPU frequency between 100 MHz and 1.5 GHz and turns cores on and off as the load varies.
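If you want to watch the governor and temperature for yourself, here is a minimal sketch that reads the usual Linux sysfs files. It assumes the standard cpufreq layout under `/sys/devices/system/cpu` and a thermal zone reporting millidegrees at `/sys/class/thermal/thermal_zone0/temp`; I have not checked that Hardkernel's 3.14 kernel exposes the temperature in exactly that place, and the function names are my own.

```python
from pathlib import Path


def read_cpufreq(cpu=0, sysfs_root="/sys"):
    """Return the governor and frequencies (in kHz) for one CPU core."""
    base = Path(sysfs_root) / "devices/system/cpu" / f"cpu{cpu}" / "cpufreq"

    def read(name):
        return (base / name).read_text().strip()

    return {
        "governor": read("scaling_governor"),
        "cur_khz": int(read("scaling_cur_freq")),
        "min_khz": int(read("scaling_min_freq")),
        "max_khz": int(read("scaling_max_freq")),
    }


def read_temp_c(zone=0, sysfs_root="/sys"):
    """Return a thermal zone reading in degrees C.

    Assumption: the kernel reports millidegrees, as mainline kernels do.
    """
    path = Path(sysfs_root) / "class/thermal" / f"thermal_zone{zone}" / "temp"
    return int(path.read_text().strip()) / 1000.0
```

Calling `read_cpufreq()` a few times while a compile is running should show `cur_khz` moving between the minimum and maximum values.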

Note that there is a jumper on the board that you must remove if you're using the dedicated power connector, rather than the micro-USB connector, to supply power. Not removing this jumper will increase the power consumption significantly.

Debian has supported ARM since 1996. Today Debian offers three ARM variants, two 32-bit and one 64-bit. Despite this, the number of ARM devices directly supported by Debian is small. Hardkernel have chosen to support Android and Ubuntu, but not Debian. There are, however, some filesystem images available containing bootloader, kernel and minimal Debian installation; using one of these as a starting point it is not too difficult to get a reasonably "vanilla" Debian installation working.

I chose to start with an image called "ODROBIAN", but this turned out to be a mistake as the one person working on it disappeared from the forum. It has been reported that he is "focusing on his career". But there are a couple of other images which should serve the same purpose. My approach has been approximately:

At the end of this process, the system comprises a kernel that I've compiled myself from Hardkernel's git, and regular Debian packages. There is very little ODROID-specific "magic" to cause confusion in the future. (See below for a few of the custom things that are needed.)

It seems to be worthwhile using Debian Stretch ("testing") rather than Jessie ("stable") as there have been some useful improvements to Debian on ARM64 since Jessie was released. Of course there is also a danger of problems using the testing release, but on balance it seems to be the right choice.

The 64-bit processor is also able to run 32-bit ("armhf") binaries. Debian now has support for installing both 32- and 64-bit packages on the same system; you just have to

    # dpkg --add-architecture armhf

and then install packages with ":armhf" appended to their names. It is possible that for some kinds of code the 32-bit version will be quicker than the 64-bit version, but I've only installed 32-bit packages where the 64-bit version was not functional for some reason, primarily firefox-esr:armhf.

The main thing that I'm not using from Debian is the kernel. I've now built my own based on Hardkernel's git tree, with some local tweaks mostly to the configuration. This is a 3.14 kernel (from March 2014, i.e. two years old) to which Amlogic's drivers for their peripherals have been added. 3.14 was a "long term support" release, which means that it had mainline support until August 2016. That has now expired so security bugs etc. are not being fixed.

There is no need for an initrd on a device like this with a custom kernel - the main purpose of an initrd is to allow distributions to ship kernels without having to build in drivers for every possible device required to mount the root filesystem. In my view, having an initrd just provides extra complexity when building/installing a new kernel and gives you one more place for misbehaviour to hide during boot. I'm therefore not using an initrd with my custom kernel. Making this work needed a couple of changes; the kernel command line can't refer to the root device by UUID, and there is some display setup that I have moved out of the initrd and into /etc/init.d/frambuffer-start. See below for these files.

Hardkernel's kernel configs seem to miss out quite a few modules; the ones I noticed were "SCSI Generic" (needed for USB CD/DVD drives) and USB printer support. They've probably now fixed those particular ones. I don't know why they haven't just taken a config from a general-purpose distribution like Ubuntu or Debian where these things must surely all be turned on.

If you want to experiment with new kernels, you need to think in advance about how you'll recover if your new kernel doesn't work. The most common option, e.g. on an x86 box with GRUB or LILO, is to keep your old known-good kernel around and use the bootloader to select it when booting. But this requires a bootloader that you can interact with. There are various options on boards like the ODROID-C2 where the bootloader (U-Boot in this case) doesn't have an interactive console:

I decided on another approach: the supplied U-Boot has a driver that can read from the GPIO pins, and I've connected a push button to one of them. When it boots, if this button is pressed it boots a "safe" version of the kernel. (The button is visible on the top of the left side of the case in the photo above.)

This is made more difficult than it need be because U-Boot's "hush" shell doesn't seem to support multi-line if statements (please correct me if I'm wrong; the documentation for hush is poor), nor any substitute such as a goto statement. The approach I've used is to do all of the "safe" initialisation first, then boot the safe kernel if the button is pressed, and then at the bottom of the script load and boot the experimental kernel. My boot.ini to implement this is linked below.

Pressing this button will recover from brickage, but it won't help you discover why your experimental kernel didn't work; you'll probably still want access to the serial console for that. But it may be sufficient to just record what is output to it. To do this, I'm using one of my trusty 11-year-old slugs (running Debian Etch). It appends everything that it receives from a USB serial cable to a file, which I can view over NFS after rebooting the ODROID-C2.
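The logging side of this needs very little code. The following is a sketch of the idea rather than the script actually running on the slug: it assumes the serial line settings (baud rate and so on) have already been set with something like `stty`, and the device and log file paths in the usage note are hypothetical.

```python
def log_serial(device_path, log_path, chunk_size=256):
    """Append everything read from device_path to log_path.

    Reads until the device reports end-of-file and returns the number
    of bytes copied. On a real serial port the read simply blocks
    until more console output arrives.
    """
    copied = 0
    with open(device_path, "rb", buffering=0) as dev, \
            open(log_path, "ab") as log:
        while True:
            data = dev.read(chunk_size)
            if not data:
                break
            log.write(data)
            log.flush()  # keep the file current for readers over NFS
            copied += len(data)
    return copied
```

It would be started with something like `log_serial("/dev/ttyUSB0", "/srv/logs/odroid-console.log")`. Flushing after every chunk matters here, because the whole point is to read the log from another machine straight after a failed boot.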

Getting a working display was harder than it should have been.

I have a 1600x1200 monitor, which although old is still perfectly functional, and I had hoped to use that with this system. But I did know before buying that 1600x1200 was not one of the supported screen resolutions listed on the website.

I asked about 1600x1200 on the forum. Apparently, supporting new screen modes involved Hardkernel asking Amlogic to add appropriate magic numbers to their kernel driver - and a previous batch of additional screen modes had taken a long time to be implemented.

With some regrets, I bought a new monitor - an NEC MultiSync EA244WMi, with a resolution of 1920x1200 (for more than twice what the ODROID-C2 and all its accessories had cost). I plugged it in - and it didn't work. It did work at 1920x1080, but not at the native 1920x1200. It turns out that the monitor, and single-link DVI in general, can only support 1920x1200 using "reduced blanking" video timings, while the board was outputting the non-reduced-blanking version with a too-high pixel clock.
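The arithmetic behind this is simple enough to sketch. The figures below are the standard VESA CVT and CVT reduced-blanking timings for 1920x1200 at a nominal 60 Hz, together with the 165 MHz pixel clock ceiling of single-link DVI; I am assuming that the board's original mode was at least close to the standard CVT one.

```python
SINGLE_LINK_DVI_MAX_MHZ = 165.0

# name: (pixel clock in MHz, total pixels per line, total lines per frame)
# The totals include the blanking intervals, not just the visible 1920x1200.
MODES = {
    "CVT 1920x1200": (193.25, 2592, 1245),
    "CVT-RB 1920x1200": (154.00, 2080, 1235),
}


def refresh_hz(pixel_clock_mhz, h_total, v_total):
    """Frames per second implied by a pixel clock and the frame totals."""
    return pixel_clock_mhz * 1e6 / (h_total * v_total)


def fits_single_link(pixel_clock_mhz):
    """True if the pixel clock is within the single-link DVI limit."""
    return pixel_clock_mhz <= SINGLE_LINK_DVI_MAX_MHZ


def describe(modes=MODES):
    """Return one summary line per mode."""
    lines = []
    for name, (clock, h_total, v_total) in modes.items():
        verdict = "fits" if fits_single_link(clock) else "too fast"
        rate = refresh_hz(clock, h_total, v_total)
        lines.append(f"{name}: {clock:.2f} MHz, {rate:.2f} Hz, {verdict}")
    return lines
```

Both modes refresh at just under 60 Hz. The reduced-blanking version gets its lower pixel clock purely by shrinking the blanking intervals: the frame total drops from 2592x1245 to 2080x1235 while the visible area stays the same.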

I wasn't going to buy yet-another monitor, so I started to look at the kernel code related to video modes. It was a mess. There were about eight places where all the supported video modes were enumerated in huge case statements or tables. I really got the impression that the person who had implemented this was being paid per line of code written. The actual content of these case statements was often an unexplained series of "magic numbers" to be written into device registers. I dived in and added a new mode for reduced-blanking 1920x1200 and deciphered what the magic numbers did - they weren't as obfuscated as I had feared.

It didn't work, though. To cut a long story short, I eventually discovered that somewhere a 16-byte fixed size buffer was being used to store the name of the video mode, and I had named my new reduced blanking mode by adding "RB" to the end of the mode name - pushing it beyond that limit. Then the hardware seemed to be generating the right signal but the framebuffer and X were only using a 1280x768 portion of it. A few weeks later I discovered this was due to yet another table of all the video modes that needed to be extended, hidden inside the initrd. Long before finding that I had given up and simply modified the existing 1920x1200 mode to use the reduced-blanking timings, rather than making it a new mode, and that works perfectly.
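To illustrate the buffer problem: a C string needs one extra byte for its terminating NUL, so a 16-byte buffer holds at most 15 characters. The mode names in this sketch are hypothetical (I am not quoting the driver's real names), and it only shows the length arithmetic; I don't know whether the driver truncated the name or overran the buffer.

```python
MODE_NAME_BUF_SIZE = 16  # bytes, including the terminating NUL


def fits_in_buffer(name, size=MODE_NAME_BUF_SIZE):
    """True if name plus its NUL terminator fits in a size-byte buffer."""
    return len(name.encode("ascii")) + 1 <= size


def truncated_copy(name, size=MODE_NAME_BUF_SIZE):
    """What a bounded, always-terminated copy would keep of name."""
    return name.encode("ascii")[: size - 1].decode("ascii")


# Hypothetical names: an existing mode, and the same mode with "RB" appended.
EXISTING = "1920x1200p60hz"    # 14 characters, 15 bytes with the NUL
WITH_RB = EXISTING + "RB"      # 16 characters, 17 bytes with the NUL
```

Here `fits_in_buffer(EXISTING)` is true but `fits_in_buffer(WITH_RB)` is false, and a truncated copy of the longer name no longer matches what was asked for, so any later lookup by name would fail.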

I posted my patch for this to the forum and Hardkernel picked it up, also adding various other new modes in their next kernel release. But hilariously they stripped all of my explanatory comments from the new code, leaving all the "magic numbers" incomprehensible!

I normally have only three peripherals attached - a monitor, keyboard and mouse. The mouse is the only one that has not caused any trouble.

The problem with the keyboard was that keys would sometimes seem to get "stuck", and auto-repeat until the next key was pressed. The "key up" event was being lost somewhere. I would see this happen maybe once or twice each hour, most often with the return key.

It seems that this is a long-standing bug that has also affected previous ODROID devices and the Raspberry Pi because their SoCs all use the same USB block, "dwc_otg". When a low-speed USB device is connected via a hub to a high-speed host, the low-speed messages are broken up by the hub into "split transactions". Apparently the SoC hardware and/or its driver don't handle split transactions well, with a very high rate of interrupts, and some messages being lost.

Apparently the Raspberry Pi people have an improved driver that fixes (or at least improves) this issue. Attempts to port this to ODROID have not yet been successful.

One plausible way to fix this is to avoid split transactions by forcing the USB bus to which the keyboard is connected to run at full speed rather than high speed. This can be done by connecting a USB 1.1 hub to the board's USB OTG port and connecting the keyboard to that.

Note that finding a genuine USB 1.1 hub is not easy! You'll find many that are described as, for example, "high-speed USB 1.1", which is contradictory. I had to get one a few years ago for a work project, and I ended up with one that looks like this; they are available on eBay:

This does seem to work. I still see very high interrupt rates (1,000 per second for one USB port and 10,000 per second for the other), and I did once have the keyboard stop working entirely followed eventually by a spontaneous reboot, but I no longer suffer keys getting "stuck".
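Interrupt rates like these can be measured by taking two snapshots of `/proc/interrupts` a known time apart and dividing the difference by the interval. A rough sketch follows; the parsing assumes the usual layout of a header line naming the CPUs followed by one line per interrupt, and the function names are mine.

```python
def parse_interrupts(text):
    """Map each interrupt label to its count summed over all CPUs."""
    lines = text.strip().splitlines()
    ncpu = len(lines[0].split())  # header line: CPU0 CPU1 ...
    totals = {}
    for line in lines[1:]:
        label, sep, rest = line.partition(":")
        if not sep:
            continue
        counts = []
        for field in rest.split()[:ncpu]:
            if not field.isdigit():
                break  # reached the controller/driver description
            counts.append(int(field))
        totals[label.strip()] = sum(counts)
    return totals


def interrupt_rates(before, after, seconds):
    """Interrupts per second for every label present in both snapshots."""
    first = parse_interrupts(before)
    second = parse_interrupts(after)
    return {
        label: (second[label] - first[label]) / seconds
        for label in second
        if label in first
    }
```

In use, you would read `/proc/interrupts`, sleep for a few seconds, read it again, and pass both strings and the interval to `interrupt_rates`.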

A bluetooth keyboard and mouse would be worth considering - as long as you can find a USB bluetooth dongle that doesn't suffer its own problems.

A very important consideration when choosing a board like this is the quantity and quality of support from both the manufacturer and the community of other users. My experience has varied considerably among the various ARM and other boards that I have used over the years.

ODROID does well, though it's not quite the best that I've ever encountered; that would probably be the community around the NSLU2. There is an active forum which seems to have a few "smart" people - as well as occasional trolls and people asking "Can it run MacOS?". Most significantly, at least some of the Hardkernel employees post on the forum both to answer questions and to pick up suggestions.

Full schematics are available. A datasheet for the S905 SoC has recently been released; it's clearly a redacted version, and I don't know how useful it would really be in practice if you wanted to write a device driver for something. (The contrast with the excellent datasheets for the IXP420 chip used in the NSLU2 is striking.)

A group whom I don't believe are connected with Hardkernel are working on mainline kernel support for at least some parts of the S905 (see http://linux-meson.com/doku.php). Their progress is impressive and mainline support for at least ethernet and eMMC, i.e. enough to run headless, can be expected in an official kernel release soon. But there are lots of Amlogic drivers for display-related things, and I don't know if they will ever implement enough to run a desktop.

One factor in the strength of a support community is simply its size, and in this respect the ODROID-C2 does well; in a survey by Hackerboards the C2 was ranked second most popular.

There is of course also the excellent Debian ARM community, though they don't often seem to get involved with particular boards.

There are various other problems that I've not yet found a satisfactory work-around for:

Normally a computer will power off its monitor when there has been no activity for a few minutes, signalling this to the monitor using a protocol called DPMS. There is no support for this in Amlogic's drivers, and it's unknown whether the hardware is capable of it, so an attached monitor will just show a blank screen. If you're concerned about energy efficiency but don't turn your monitor off with its power switch, this could eliminate the savings from using a low-power computer!

Luckily for me, my new monitor has a "human detection" feature; it has some sort of IR sensor on the front, so it can shut itself down autonomously when there is no-one sat in front of it.

It is possible to entirely disable the HDMI output, and a hack has been posted on the forum to do this to blank the screen. The disadvantage is that the monitor will display a "no signal" message for a while in this case before actually powering off.

When I bought the board I knew that 3D acceleration was not available, despite the SoC including a Mali 450 GPU. What I didn't know was that 2D acceleration was also not available, despite the SoC including a 2D rectangle copying engine - and while a 3D driver has since become available, there is still no 2D driver nor much chance of getting one.

The main issue is apparently that while the CPU and GPU have MMUs between them and the memory, the 2D unit ("ge2d") doesn't, so it would be necessary to use physically-contiguous RAM for everything. More realistically the 3D GPU could be used for 2D acceleration; this is what the X-replacement "Wayland" would do - but we don't yet have a Mali driver that Wayland will talk to. It seems that ARM have released a suitable Mali driver, but it has not yet been ported to this device.

I don't know whether this is a kernel bug specific to this board, or a generic kernel bug (in which case it may have been fixed since 3.14), or something else - but vmstat shows bogus values in its blocks in/out columns:

    procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
     r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
     1  0   7428 171092 183576 1012704    0    0     0     0 9988  667 15  7 78  0  0
     1  0   7428 171092 183576 1012704    0    0     0     0 9956 1195 14 14 73  0  0
     0  0   7428 170976 183576 1012704    0    0 4294967293    0 9963 2706 41 18 40  0  0
     0  0   7428 170976 183576 1012704    0    0     0     0 9910 1089  3  1 95  0  0
     0  0   7428 170976 183576 1012704    0    0     0     0 9898  877  8  9 84  0  0
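One observation about the bogus figure: 4294967293 is exactly 2^32 - 3, which is how -3 appears when it is stored in, or printed as, an unsigned 32-bit number. That would be consistent with a counter appearing to go backwards slightly between two samples, though this is only a guess at the cause. A small sketch of the reinterpretation:

```python
def as_signed32(value):
    """Reinterpret an unsigned 32-bit quantity as a signed one."""
    value &= 0xFFFFFFFF
    if value & 0x80000000:
        return value - (1 << 32)
    return value


def as_unsigned32(value):
    """The reverse: how a (possibly negative) number looks as unsigned 32-bit."""
    return value & 0xFFFFFFFF
```

With these, `as_signed32(4294967293)` gives -3, and `as_unsigned32(-3)` gives back the number that vmstat printed.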

My experience with USB has been that no-one implements it properly. (In contrast to, for example, ethernet.) In this case, the board has a micro-B socket for its OTG port where it should have a micro-AB. The difference is that the micro-AB socket will accept a micro-A plug, which is what is needed to use the port as a host, while a micro-B will not.

It appears that they've done this because they've copied the various smartphones that have also incorrectly fitted micro-B sockets. Not to worry though, as cable manufacturers have responded by selling "impossible" cables such as micro-B to micro-B (with the ID pin earthed at one end) and micro-B to full size A socket.

Modern filesystems, i.e. anything since about 2001, include a feature called "journaling" that makes them much less vulnerable to corruption if they are not cleanly unmounted, e.g. if the device is not shut down properly or if the power is unplugged. Unfortunately, Hardkernel have chosen to disable this very useful feature in the filesystem images that they distribute.

It is easy to re-enable it though:

    # tune2fs -j /dev/mmcblk0p2

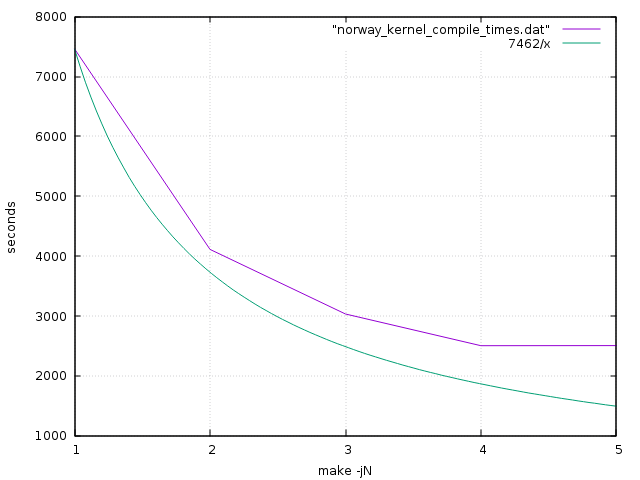

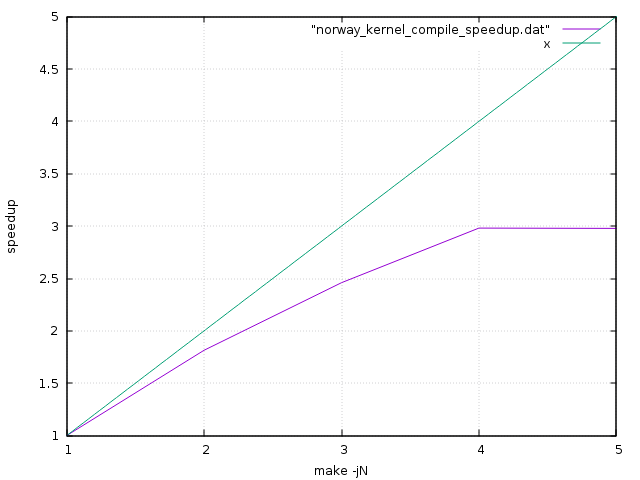

Here are a couple of graphs to illustrate how performance scales with concurrency, to give an idea of the effectiveness of the quad-core architecture. These are kernel compilation times; the first shows the absolute compilation time (and in green the time if perfect speedup were achieved), and the second shows speedup.

Clearly there are one or more serial bottlenecks, e.g. memory, caches, filesystem, etc., that prevent perfect speedup, but the scaling is not bad.

$ sysbench --test=cpu --cpu-max-prime=20000 run

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 1

Doing CPU performance benchmark

Threads started!

Done.

Maximum prime number checked in CPU test: 20000

Test execution summary:

total time: 23.9030s

total number of events: 10000

total time taken by event execution: 23.8971

per-request statistics:

min: 2.38ms

avg: 2.39ms

max: 10.87ms

approx. 95 percentile: 2.40ms

Threads fairness:

events (avg/stddev): 10000.0000/0.00

execution time (avg/stddev): 23.8971/0.00

$ sysbench --num-threads=2 --test=cpu --cpu-max-prime=20000 run

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 2

Doing CPU performance benchmark

Threads started!

Done.

Maximum prime number checked in CPU test: 20000

Test execution summary:

total time: 12.1583s

total number of events: 10000

total time taken by event execution: 24.3008

per-request statistics:

min: 2.38ms

avg: 2.43ms

max: 22.49ms

approx. 95 percentile: 2.45ms

Threads fairness:

events (avg/stddev): 5000.0000/9.00

execution time (avg/stddev): 12.1504/0.00

$ sysbench --num-threads=4 --test=cpu --cpu-max-prime=20000 run

sysbench 0.4.12: multi-threaded system evaluation benchmark

Running the test with following options:

Number of threads: 4

Doing CPU performance benchmark

Threads started!

Done.

Maximum prime number checked in CPU test: 20000

Test execution summary:

total time: 6.5236s

total number of events: 10000

total time taken by event execution: 26.0162

per-request statistics:

min: 2.38ms

avg: 2.60ms

max: 52.48ms

approx. 95 percentile: 2.48ms

Threads fairness:

events (avg/stddev): 2500.0000/28.06

execution time (avg/stddev): 6.5040/0.02
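The "total time" figures from the three sysbench runs above can be turned into speedup and parallel efficiency with a few lines. This sketch just does that arithmetic; the function names are my own.

```python
# Total time in seconds for each thread count, copied from the runs above.
TOTAL_TIME = {1: 23.9030, 2: 12.1583, 4: 6.5236}


def speedup(t_single, t_parallel):
    """How many times faster the parallel run was than the single thread."""
    return t_single / t_parallel


def efficiency(t_single, t_parallel, threads):
    """Speedup as a fraction of the ideal, which equals the thread count."""
    return speedup(t_single, t_parallel) / threads


def summarise(times=TOTAL_TIME):
    """Return (threads, speedup, efficiency) tuples, lowest thread count first."""
    base = times[1]
    return [
        (n, speedup(base, t), efficiency(base, t, n))
        for n, t in sorted(times.items())
    ]
```

This gives a speedup of about 1.97 with two threads and about 3.66 with four, i.e. roughly 98% and 92% of ideal. This CPU-bound test scales better than the kernel compilation, which is what you would expect since it barely touches memory or the filesystem.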

mbw is a simple single-threaded memory bandwidth test.

    $ mbw 200
    AVG Method: MEMCPY Elapsed: 0.12769 MiB: 200.00000 Copy: 1566.234 MiB/s
    AVG Method: DUMB Elapsed: 0.12759 MiB: 200.00000 Copy: 1567.560 MiB/s
    AVG Method: MCBLOCK Elapsed: 0.06017 MiB: 200.00000 Copy: 3323.711 MiB/s

I have mixed feelings about my ODROID-C2. Its performance and hardware features are sufficient for my needs. There are some rough edges though, and the deceit around the maximum clock speed has left a very bad taste.

(I'll qualify that by saying that my needs are actually quite modest. I mostly need terminals, a compiler, and a web browser. I do sometimes do some basic graphics work of which the most demanding is the panorama-stitching program Hugin; I've not tried to use that on this board yet, and I imagine it will not be a great experience.)

The state of device driver support on this and similar devices is depressing. Things are actually getting worse rather than better. In particular, the tendency for important hardware blocks to be "secret" is increasing, and the shortening life cycle of the SoCs means that manufacturers have moved on to their next thing and forgotten about yours while you're still trying to use it.

64-bit ARM Linux support, in the kernel and in Debian, seems to be excellent.

Hardkernel seem to be a decent company and they are working hard to support the device.

Before the clock speed debacle I was sufficiently happy that I bought a second device, which is being used as a router (it has a single ethernet port, but I'm using it with two VLANs to separate internal and external traffic); it is massively over-spec for this role considering my rather slow internet connection speed, but it's easy for me to have two devices with only one set of idiosyncrasies to discover.

Now I just need to get used to all the things that have changed in the software packages I use! GIMP's save vs. export thing is my first nasty surprise.

Eventually you may need to run an x86 binary, for some reason. In my case this was when I wanted to build U-Boot, and ironically Hardkernel distribute the U-Boot source with an x86 binary to do the required code signing.

Luckily, it was remarkably easy to do this using qemu; I just installed the qemu-user package and it worked. This was largely because the executable I wanted to run was statically linked; if you want to run dynamically linked executables you'll need to jump through some more hoops.

The hardware has Mali 3D graphics, and binary-blob drivers are available - but there are a couple of important provisos. Firstly, the drivers are only for OpenGL ES, not for "desktop" OpenGL. So most OpenGL applications that you might want to run are still not going to be accelerated. (There is an OpenGL to OpenGL ES translation library called glshim, but it supports only a subset of OpenGL.) Secondly, if you do install the OpenGL ES drivers the speed of the 2D desktop (i.e. moving windows around, scrolling etc.) deteriorates significantly.

If you want to try the OpenGL ES drivers, you need to:

    $ git clone https://github.com/mdrjr/c2_mali_ddx
    $ cd c2_mali_ddx
    $ sudo apt-get install xserver-xorg-dev
    $ ./configure
    $ make -j4
    $ sudo cp src/.libs/mali_drv.so /usr/lib/xorg/modules/drivers/
    $ sudo cp src/xorg.conf /etc/X11/

You can tell if this has worked by running es2_info; it will report "GL_RENDERER: Mali-450 MP". But actually you'll notice that it has "worked" sooner than that because you'll find your 2D desktop is like treacle.

Here are some of the files that I've needed to add or modify. Note that these copies may not be entirely up-to-date with things like the kernel.